
The Quiet Storm Brewing Around India AI Laws

Until recently, conversations around artificial intelligence (AI) in India mostly focused on innovation: startups, unicorns, and endless possibilities. But as the technology matures and AI tools seep into our daily lives (think ChatGPT, facial recognition at airports, and AI-powered recruitment), India finds itself at a critical inflection point.

India AI laws are no longer just a theoretical conversation among policymakers. They are becoming a living reality, with legal implications for developers, businesses, and even everyday citizens. And yet, as someone who works in the tech content space, I’ve often noticed one thing missing in this conversation: clarity.

We hear buzzwords like “responsible AI,” “ethical AI,” and “privacy,” but what do India AI laws actually say? What happens when an AI system misbehaves? Or when facial recognition gets it wrong? Or when an algorithm unknowingly discriminates based on caste, gender, or religion?

In this article, we’ll walk through real facts, current drafts, case studies, and commentary from people who’ve been on the ground building or battling these systems. The goal? To decode India’s approach to regulating AI without the jargon. Because when it comes to India AI laws, we’re all stakeholders—whether we write code, click “accept,” or simply scroll Instagram.

The Legal Vacuum: India’s Current AI Law Landscape

Here’s the strange truth: India AI laws do not exist in the form of a singular, codified act—yet.

As of April 2025, there is no dedicated AI law passed by the Indian Parliament. Instead, AI is indirectly governed by existing laws such as:

The Information Technology Act (2000) – Mainly addresses cybercrimes and data breaches.

The Digital Personal Data Protection Act (2023) – Focuses on how personal data (which fuels AI) is collected, stored, and shared.

Consumer Protection Act (2019) – Can potentially hold companies liable if an AI-driven product or service causes harm.

However, none of these explicitly target the unique challenges of AI—bias in algorithms, autonomous decision-making, or explainability. And that’s a big deal.

Imagine this: A resume-screening AI filters out applicants based on surnames associated with certain castes. Is it illegal? Possibly. But under which law? And who’s responsible—the company, the developer, or the algorithm itself?

Until India AI laws offer answers to such questions, enforcement remains inconsistent and reactive.

Draft Frameworks and What They Reveal

Even without formal laws, India has started working on frameworks to guide AI development. The most notable one is the “Responsible AI for All” strategy released by NITI Aayog.

Key features include:

Fairness – AI systems must avoid discriminatory outcomes.

Transparency – AI decisions should be explainable to users.

Accountability – Clear guidelines on who is liable if an AI system causes harm.

Privacy – AI must respect user data rights.

Though not legally binding, this strategy shows the direction India AI laws might take in the near future. It emphasizes self-regulation over hard policing. But is that enough?

Critics argue that without enforceable regulations, ethical frameworks are just wish lists. And they’re not wrong. In 2023, an AI-powered facial recognition system deployed at a railway station misidentified several passengers as criminals, causing detentions and panic. No clear accountability followed.

So, while frameworks are a good start, they can’t substitute for real India AI laws with legal teeth.

Learning from Global Case Studies

India isn’t the only country dealing with AI’s legal dilemmas. Let’s look at a few examples that are already shaping the debate here.

1. The EU’s AI Act (2024)

The European Union passed a groundbreaking AI Act that sorts AI systems into risk categories: minimal, limited, high, and unacceptable. For example, most remote biometric identification systems are classed as “high-risk” and subject to strict rules, while real-time remote biometric identification in public spaces for law enforcement is largely prohibited.

India is watching closely. In fact, a 2024 internal report by MeitY (Ministry of Electronics and IT) proposed adopting a similar risk-based approach in future India AI laws.

2. The United States – Sectoral Regulation

The US has taken a different route—regulating AI within sectors like healthcare, finance, and defense. The FDA already evaluates AI-driven medical tools. This could serve as a model for India’s fragmented legal system.

Case in point: In 2022, an AI-assisted health app misdiagnosed cancer risk in a Delhi clinic. The absence of India AI laws meant no standard audit or investigation could be mandated.

These global cases underline one truth: India needs AI laws tailored to its own context, and it needs them fast.

The Ethics Question: Who’s Responsible When AI Fails?

Let me share something personal. In 2023, I built a small AI script to auto-generate product descriptions for my client’s eCommerce store. It worked fine until one day it labeled a saree a “cheap knockoff” instead of “affordable traditional wear.” The client was furious. I had to explain it was a model hallucination.

Now imagine this same glitch happens at scale—in healthcare or criminal justice. India AI laws need to define clear guidelines on who is responsible: the creator, the deployer, or the platform.

Some proposals suggest algorithmic audits—where any AI tool above a certain user base or impact level must go through regular third-party checks. Think of it as “AI pollution control.”

Others want mandatory AI liability insurance, so that victims are compensated if a company’s AI causes harm.

But until these are codified into proper India AI laws, accountability remains a grey area. And grey is dangerous.

Bias in the Machine: A Hidden Danger

AI systems are trained on data. And data, especially in India, carries the weight of history—of caste, gender, region, and language.

In 2024, a study by IIIT Hyderabad found that a Hindi-to-English AI translation system consistently assigned “he” to people in male-dominated professions like “engineer” and “she” to those in home-based roles like “cook.” This wasn’t intentional bias; it was embedded bias.

India AI laws must recognize these subtle dangers and introduce:

Bias testing mandates

Diversity in training data

Human oversight panels for critical use cases

After all, AI doesn’t “think”—it reflects. And if the data is flawed, the reflection is distorted.
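The article doesn’t specify what a bias-testing mandate would actually check. As one possible illustration, the sketch below computes approval rates per group and compares the lowest to the highest (a disparate impact ratio) on made-up loan-approval data. The 0.8 cutoff is an assumption borrowed from the “four-fifths rule” in US employment guidance, not anything proposed for India, and the function names and numbers are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of approved outcomes per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was approved at all, so there is no gap to measure
    return min(rates.values()) / highest


# Assumed threshold, borrowed from the US "four-fifths rule";
# not a standard set by any Indian law or draft.
FOUR_FIFTHS = 0.8

# Hypothetical toy data: (applicant group, loan approved?)
decisions = (
    [("women", True)] * 30 + [("women", False)] * 70
    + [("men", True)] * 50 + [("men", False)] * 50
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'women': 0.3, 'men': 0.5}
print(round(ratio, 2))  # 0.6
print("flag for human review" if ratio < FOUR_FIFTHS else "within threshold")
```

A ratio below the cutoff does not prove discrimination, and a ratio above it does not rule it out. It is a screening signal that the human oversight panels listed above would then investigate.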

Innovation vs Regulation: A Balancing Act for Indian Startups

India’s tech ecosystem is booming. In 2024 alone, over 900 new AI startups emerged, according to Tracxn data. From AI-powered diagnostics in Tier-2 cities to deep-learning tools for agriculture, innovation is no longer limited to metros.

But this explosion of AI development also brings friction.

Startups argue that early enforcement of India AI laws could stifle innovation. Regulations often mean compliance costs, legal teams, and slower time-to-market. For a bootstrapped founder trying to ship an MVP, these hurdles can feel overwhelming.

Yet the lack of legal clarity is equally risky. Imagine launching an AI-based education tool for children and later realizing your model was trained on copyrighted textbooks without permission. Without proper India AI laws, you’re left navigating a minefield of legal grey zones.

This is the paradox—too much regulation too soon might kill innovation, but too little leads to chaos and mistrust.

What India needs now is a tiered approach: Start with light-touch compliance for early-stage companies, and gradually scale up as the product reaches mass adoption. Think of it like seatbelts: optional for prototypes, mandatory for production cars.

The Role of MeitY: What the Government is Planning

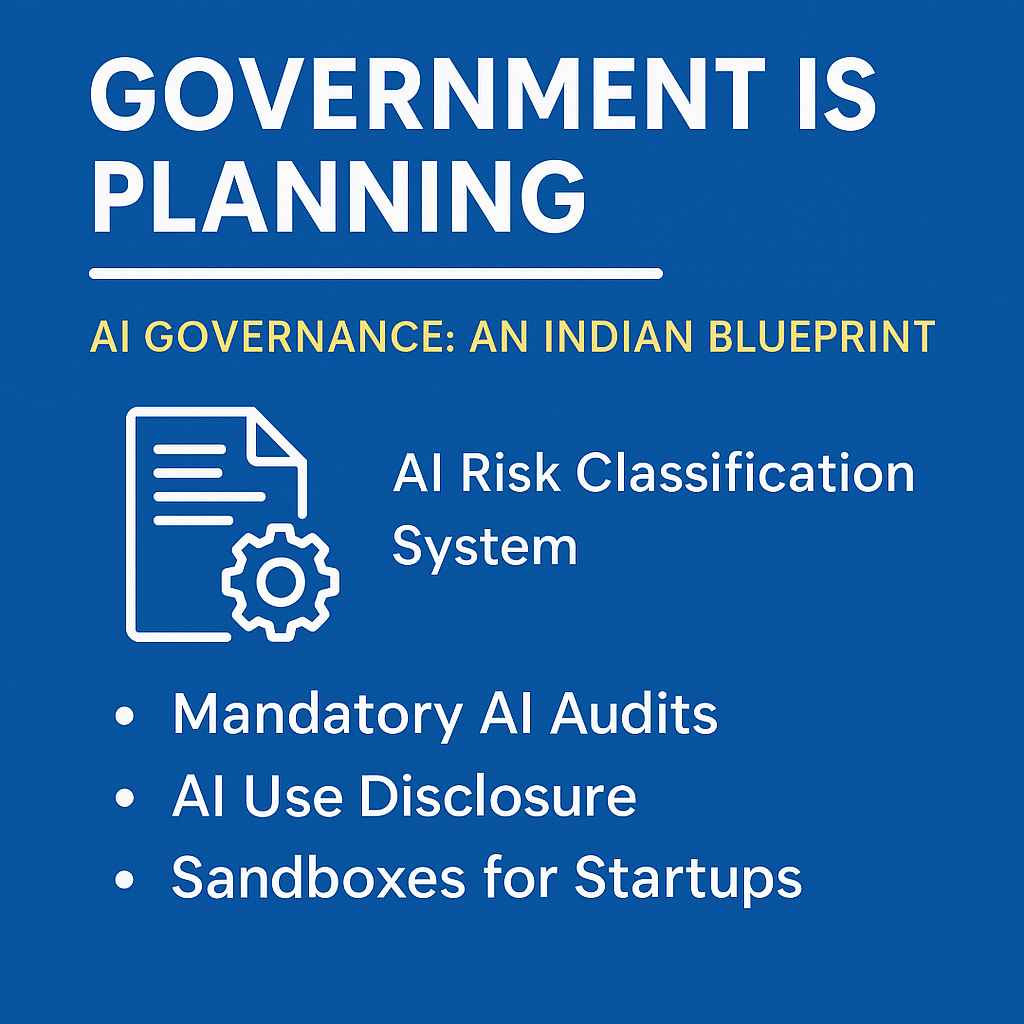

The Ministry of Electronics and Information Technology (MeitY) is leading the charge toward structured AI governance. In late 2024, MeitY released a white paper titled “AI Governance: An Indian Blueprint.”

Here are the key proposals outlined for future India AI laws:

1. AI Risk Classification System – Similar to the EU model, it sorts AI tools into three levels: Low-risk (chatbots), Medium-risk (eCommerce personalization), High-risk (surveillance, healthtech, finance).

2. Mandatory AI Audits – High-risk applications must undergo regular third-party audits for fairness, accuracy, and privacy protection.

3. AI Use Disclosure – Any platform using AI must disclose to the end-user that decisions or recommendations are made by algorithms.

4. Sandboxes for Startups – Startups can test their models in controlled environments without fear of legal action, encouraging safe innovation.

These are not yet India AI laws, but they lay the groundwork. And importantly, they aim to keep India’s AI ecosystem both competitive and compliant.
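To make the first three proposals concrete, here is a minimal sketch of how a compliance team might encode them, assuming the tiers and example use cases exactly as summarized above. The identifiers (`RiskTier`, `USE_CASE_TIERS`, `obligations_for`) and the obligation labels are hypothetical and do not come from any MeitY text; a real classification would depend on the final rules.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"        # e.g. chatbots
    MEDIUM = "medium"  # e.g. eCommerce personalization
    HIGH = "high"      # e.g. surveillance, healthtech, finance


# Hypothetical lookup table built from the example use cases in the proposals
USE_CASE_TIERS = {
    "chatbot": RiskTier.LOW,
    "ecommerce_personalization": RiskTier.MEDIUM,
    "surveillance": RiskTier.HIGH,
    "healthtech": RiskTier.HIGH,
    "finance": RiskTier.HIGH,
}


def obligations_for(use_case: str) -> list[str]:
    """Return the illustrative obligations a deployment would carry."""
    tier = USE_CASE_TIERS[use_case]
    # Proposal 3: any platform using AI must disclose it, whatever the tier.
    duties = ["disclose_ai_use"]
    # Proposal 2: only high-risk applications need regular third-party audits.
    # (The proposals as summarized name no extra duty for the medium tier.)
    if tier is RiskTier.HIGH:
        duties.append("third_party_audit")
    return duties


print(obligations_for("chatbot"))     # ['disclose_ai_use']
print(obligations_for("healthtech"))  # ['disclose_ai_use', 'third_party_audit']
```

Keeping the tier assignments in a table separate from the obligation logic means a use case could be reclassified without touching the rules, which is roughly how a risk-based regime would need to evolve as new applications appear.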

The Lived Reality: Stories from the Ground

Let’s step away from policy papers for a moment and look at how the lack of India AI laws plays out in real life.

Case 1: The Bank Loan Bot

A fintech startup in Mumbai launched a chatbot that helped users get instant approval for microloans. Great product. But it rejected women applicants at a higher rate than men. It turned out the training data had fewer female profiles marked “creditworthy,” and the model reinforced that bias.

There were no penalties because no law was broken—only ethics. The company fixed the bug, but the damage to trust was done.

Case 2: AI in Policing

In 2023, Hyderabad Police began using facial recognition software to track suspects in public places. Within months, reports emerged of false positives, especially among darker-skinned individuals. One 19-year-old was mistakenly arrested twice.

This led to public outcry but no formal accountability—again, because India AI laws do not yet address automated surveillance.

These stories show that regulation isn’t about slowing down AI—it’s about building a system people can trust.

Industry Pushback and Lobbying

Not everyone’s a fan of fast-tracked AI regulation.

Major industry players—from edtech to ride-hailing—have pushed back, citing the complexity of building explainable AI. Their concern is that if India AI laws are too strict, global players might find the Indian market less attractive.

A leaked memo from a major ride-sharing company in 2024 stated:

> “If AI audits are made mandatory, it will increase cost per user by 14–17%. We recommend voluntary ethics disclosures instead.”

This raises an important debate: Should India favor growth or governance?

In my opinion as a writer and observer in the tech space, a hybrid model makes the most sense. The law can’t be a straitjacket, but it can’t be absent either. Guardrails, not gates.

AI and Employment: The Human Cost

AI in India isn’t just changing how we code or market—it’s reshaping livelihoods.

By the end of 2025, industry analysts expect AI to automate up to 15 million jobs in areas like customer service, data entry, and basic analytics. While AI creates new opportunities too, the transition period is going to be painful.

India AI laws must also consider social impact. Some proposals include:

Reskilling mandates – Companies that deploy AI at scale must invest a portion of savings into upskilling their workforce.

AI Impact Reports – Public companies may be required to disclose how AI adoption affects employment annually.

These are not yet enacted, but they highlight a growing realization: regulation must also protect livelihoods, not just prevent harm.

Tech for Good: AI That Needs Legal Boosts

Not all AI use cases are problematic. Many are solving real challenges—from detecting TB in rural India to predicting crop diseases using satellite images.

But without supportive India AI laws, these tools often get stuck in pilot phases. Hospitals hesitate to adopt AI diagnostics for fear of legal liability. Schools are unsure whether AI-based grading violates exam board norms.

If India wants AI for social good, laws must not only prevent harm but enable trust.

That means:

Pre-approved AI models for public use

Clear consent protocols in healthcare

Government-backed liability protection for nonprofit use cases

Why I Care About This

I’m not a lawyer. I’m not a policy researcher. I’m someone who’s seen AI from the inside out—as a user, builder, and writer. And I’ve learned one thing: unregulated power is not neutral.

In 2022, I created a basic AI script that analyzed YouTube thumbnails and tried to predict which ones would go viral. It was fun—until I realized it almost never selected women-led videos. I hadn’t coded that bias in, but it was there.

It made me uncomfortable. It made me question everything I thought I understood about tech. That’s when I started reading up on India AI laws—and quickly found there weren’t many.

That’s why I’m writing this article. Not to tell you what to think. But to start a conversation that we can’t afford to delay anymore.

What Should India Prioritize in Its AI Legal Journey?

Now that we’ve seen the spectrum (innovation, risk, case studies, and global examples), let’s break down what India’s realistic priorities should be as it shapes AI regulation.

1. Clarity First, Complexity Later

The first draft of India AI laws should not aim to solve everything. Just like the IT Act 2000 evolved over time, AI laws should start simple. Clear definitions of liability, fairness requirements, and mandatory AI disclosures are a good starting point.

2. Risk-Based Regulation

Not every AI tool needs the same scrutiny. A recommendation engine for movies shouldn’t be treated like an AI diagnosing cancer. India AI laws should adopt a tiered compliance model—light for low-risk tools, tight for high-risk systems.

3. Support for Ethical Startups

Imagine a law that rewards ethical AI behavior. Startups that publish open audits or participate in regulatory sandboxes could get tax credits or fast-track approvals. This makes regulation a carrot—not just a stick.

4. Citizen Awareness

Without public understanding, regulation is hollow. Most people don’t even know when AI is being used on them. India AI laws must mandate disclosure and digital literacy campaigns in local languages.

Frequently Asked Questions (FAQs) About India AI Laws

Q1. Does India have any official AI law as of 2025?

No. There’s no standalone law yet. Current AI operations are guided by existing data, IT, and consumer laws. However, policy papers by MeitY and NITI Aayog are actively shaping upcoming India AI laws.

Q2. Will the future India AI laws ban facial recognition?

Not likely. The government uses facial recognition for policing and verification. However, new laws may regulate its use, especially regarding consent, accuracy, and redressal in case of false identification.

Q3. What is the government doing to regulate harmful AI algorithms?

MeitY is working on a risk classification system, mandatory AI audits for high-risk tools, and accountability norms—all expected to become part of formal India AI laws soon.

Q4. How will India AI laws impact startups?

Startups may face compliance costs if building high-risk tools. However, policies like regulatory sandboxes are being considered to support safe testing without full-scale legal risk.

Q5. Can I sue a company if their AI harms me?

Right now, it depends. You may need to file a case under the IT Act or Consumer Protection Act. India AI laws, once formalized, are expected to define clearer liabilities for such situations.

Q6. Will AI laws affect common apps like Instagram filters or shopping bots?

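Probably only lightly. Under the MeitY proposals, any platform using AI would have to disclose that use, and shopping bots could fall into the medium-risk tier, but mandatory audits are aimed at high-risk tools. Most personal-use AI tools are likely to stay under light-touch regulation unless they collect sensitive data.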

Q7. What role will ethics play in India AI laws?

A major one. Laws are expected to incorporate guidelines on fairness, privacy, explainability, and consent. But enforcement is the real challenge.

Time Is Ticking for India AI Laws

India is neither too early nor too late to act. The country has a unique advantage: it can learn from the mistakes and successes of others, like the EU or US, while also crafting something that fits our cultural and economic context.

But for that to happen, we need more than just policymakers. We need coders, founders, consumers, teachers, journalists—everyone—to join the conversation.

As someone who works in tech and storytelling, I’ve seen firsthand what unregulated AI can do. It’s not always malicious. Sometimes it’s careless. And sometimes, it’s simply overlooked. That’s exactly what India AI laws are meant to fix—not by slowing us down, but by keeping us on track.

It’s time we stop asking “Will India regulate AI?” and start discussing how India can lead AI regulation, the right way.
